Change the scraper's headers so that requests appear to come from a web browser. Scraped data can be easily exported as a local file or to a database. There is no limit on the amount of data that can be extracted and exported. Listings that span multiple pages can also be extracted. All information on Oxylabs Blog is provided on an "as is" basis and for informational purposes only.
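As a rough illustration of the header change, the sketch below builds a request object that carries browser-like headers, using only Python's standard library. The header values and the example URL are assumptions for illustration, not values taken from this article; in practice you would copy them from your own browser's developer tools. Nothing is sent over the network here.

```python
from urllib.request import Request

# Illustrative values only (assumed); copy real ones from your own browser.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/118.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url: str) -> Request:
    """Return a Request carrying browser-like headers (nothing is sent yet)."""
    return Request(url, headers=BROWSER_HEADERS)


# Hypothetical product URL used only to show the call.
req = build_request("https://example.com/product/123")

# urllib stores header names capitalized, e.g. "User-agent".
print(req.get_header("User-agent"))
```

Passing the resulting object to `urllib.request.urlopen` would send the request with these headers instead of the default Python user agent.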

This may be because you have not accounted for the efficiency and speed of the algorithm. You can do some basic math while designing it. Remove the query parameters from the URLs to strip identifiers that link requests together. Rotate IPs through different proxy servers if you need to. You can also deploy a consumer-grade VPN service with IP rotation capabilities. If you want to evaluate everything these large eCommerce platforms can offer your business, contact us.
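The two tips about query parameters and proxy rotation can be sketched in a few lines. This is a minimal example under stated assumptions: the proxy addresses and the product URL are hypothetical placeholders, and the helper names `strip_query` and `proxy_rotation` are made up for illustration.

```python
from itertools import cycle
from urllib.parse import urlsplit, urlunsplit

# Hypothetical proxy endpoints; replace with proxies you actually control.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def strip_query(url: str) -> str:
    """Drop the query string and fragment, keeping scheme, host and path."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def proxy_rotation(proxies):
    """Return an iterator that yields the proxies in round-robin order."""
    return cycle(proxies)


# Hypothetical URL with tracking-style parameters.
clean = strip_query(
    "https://www.example.com/dp/B000000000?ref=sr_1_1&qid=1697600000"
)
rotation = proxy_rotation(PROXIES)
first_four = [next(rotation) for _ in range(4)]  # wraps back to the first proxy
```

Some sites need part of the query string to serve the right page, so it may be safer to remove only the parameters you know are identifiers.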

Amazon Data Scraping Solution

For free users, everyone gets 1,000 free page-scrape credits per month, with a limit of 720,000 in total. Once you have the HTML of the target product page, you need to parse it using BeautifulSoup. It lets you find the data you want in the parsed HTML content. For example, if you want all products in a category that contains many items, you will need keywords to define the subcategories for each search query. Suppose you want to scale things up and start with millions of product records today.
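To show what "finding the data in the parsed HTML" means, here is a small sketch that uses Python's built-in `html.parser` as a stand-in, so that it runs without third-party packages. It is not BeautifulSoup; with BeautifulSoup the same lookup would typically be a single call such as `soup.find(id="productTitle")`. The sample markup and the `productTitle` id are assumptions for illustration, and real product pages may be structured differently.

```python
from html.parser import HTMLParser

# Assumed sample markup; real product pages will differ.
SAMPLE_HTML = """
<html><body>
  <span id="productTitle"> Example Widget, 2-Pack </span>
  <span class="price">$19.99</span>
</body></html>
"""

# Tags that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class TextById(HTMLParser):
    """Collect the text inside the first element with a given id."""

    def __init__(self, target_id: str):
        super().__init__()
        self.target_id = target_id
        self.depth = 0  # greater than 0 while inside the target element
        self.chunks = []
        self.done = False

    def handle_starttag(self, tag, attrs):
        if self.done or tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
            if self.depth == 0:
                self.done = True

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


parser = TextById("productTitle")
parser.feed(SAMPLE_HTML)
title = "".join(parser.chunks).strip()
print(title)
```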

Change the scraper's headers so that requests appear to come from a web browser and not from a piece of code. Beyond that, the structure of the page may or may not differ between products. Worst of all, you may not foresee this problem and may also run into network errors and unknown responses. To make these analytical strategies more robust, you need high-quality, reliable data. Step 2.2 - Slow running scraper - Configure Scraper - Set Wait time to 1000, No. of retry attempts to 1 and Minimum wait before scraping to 2000.
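The wait and retry settings in Step 2.2 can be mirrored in code. The sketch below is one possible reading of those settings, assuming the values are milliseconds: wait 2000 ms before the first attempt, and on a network error wait 1000 ms before a single retry. The function name `fetch_with_retry` and the simulated `flaky_fetch` are hypothetical; the `sleep` parameter is injectable so the demonstration runs without real delays.

```python
import time
from typing import Callable


def fetch_with_retry(
    fetch: Callable[[str], str],
    url: str,
    *,
    wait_ms: int = 1000,      # pause between attempts (assumed milliseconds)
    retries: int = 1,         # number of retry attempts after the first try
    min_wait_ms: int = 2000,  # minimum wait before scraping starts
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying on network errors with a fixed pause."""
    sleep(min_wait_ms / 1000)
    attempts = max(retries, 0) + 1
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:  # covers ConnectionError, TimeoutError, URLError
            if attempt == attempts - 1:
                raise
            sleep(wait_ms / 1000)
    raise RuntimeError("unreachable")


# Demonstration with a simulated fetch that fails once, then succeeds.
calls = {"n": 0}


def flaky_fetch(url: str) -> str:
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("simulated network error")
    return "<html>ok</html>"


waits = []  # records requested pauses instead of actually sleeping
html = fetch_with_retry(flaky_fetch, "https://example.com/item", sleep=waits.append)
```

In real use you would drop the `sleep=waits.append` argument so the pauses actually happen.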

- If you need rendering, you will need tools like Playwright or Selenium.

- Get data from a website - Select the content on the page you want to scrape.

- This way, more data can be scraped more often.

- Talk to an Octoparse data expert now to discuss how web scraping services can help you maximize your efforts.

- And once everything is done, an Excel version of the scraped data will be available to download in the data preview window shown above.

This is the most common technique among scrapers that track products for themselves or as a service. You can learn a lot by studying product reviews, both your competitors' and your own. For example, you may find out what people like or dislike most about their products and whether your products have met your customers' needs and wishes.

IP Address Blocking

With these sitemaps, Web Scraper will navigate the site however you want and extract data that can later be exported as a CSV. Building a web scraper requires professional coding knowledge and is also time-consuming. For non-coders or developers who want to save time, web scraping extensions and software are better choices. Many platforms state in their terms of use that platform data cannot be used for commercial purposes. Don't worry, using the data for market research, sentiment analysis, competitor analysis, etc., is likely to be considered "fair use".
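For readers who do write their own scraper, exporting to CSV takes only a few lines with Python's standard `csv` module. The sketch below is a minimal example; the field names and sample rows are made up for illustration, and the data is written to an in-memory buffer so the example leaves no files behind.

```python
import csv
import io

# Made-up sample rows standing in for scraped product data.
rows = [
    {"title": "Example Widget", "price": "19.99", "rating": "4.5"},
    {"title": "Another Widget, Large", "price": "24.50", "rating": "4.1"},
]


def write_csv(records, fileobj):
    """Write the records as CSV with a header row."""
    writer = csv.DictWriter(fileobj, fieldnames=["title", "price", "rating"])
    writer.writeheader()
    writer.writerows(records)


buffer = io.StringIO()
write_csv(rows, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write a real file, pass `open("products.csv", "w", newline="", encoding="utf-8")` instead of the buffer. Note that the writer quotes the title containing a comma automatically.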

Requests is a popular third-party Python library for making HTTP requests. It provides a simple and intuitive interface for making HTTP requests to web servers and receiving responses. It is perhaps the best-known library related to web scraping.

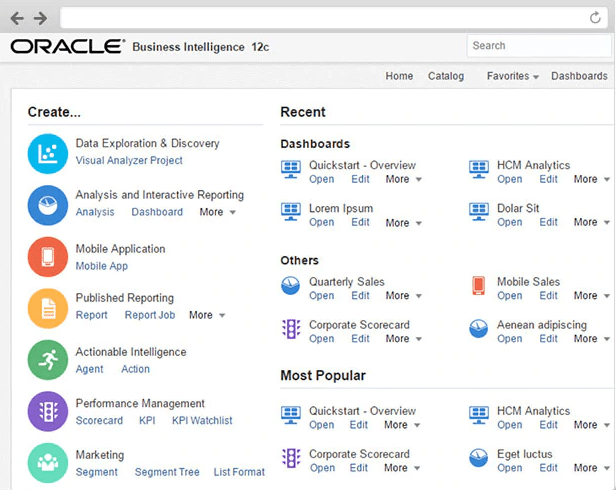

Using content from reviews will help you better understand the pros and cons of products, and then improve quality and customer service. In addition, scraping reviews or other user-generated content may raise additional copyright concerns. ParseHub is another free web scraper available for direct download.

In Octoparse, a failed-solve approach is used to train the scraper to handle this kind of CAPTCHA. If you keep large lists or dictionaries in memory, you may put an extra burden on your machine's resources. We recommend moving your data to permanent storage as soon as possible.
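One way to avoid holding everything in memory is to write each batch of scraped rows to a database as soon as it arrives. The sketch below uses Python's built-in `sqlite3` module; the table layout, the `save_batch` helper, and the `scrape_pages` generator are hypothetical stand-ins for a real scraper. It connects to `:memory:` only so the example leaves no files behind.

```python
import sqlite3


def save_batch(conn, batch):
    """Insert one batch of (title, price) rows and commit immediately."""
    conn.executemany("INSERT INTO products (title, price) VALUES (?, ?)", batch)
    conn.commit()


def scrape_pages():
    """Hypothetical stand-in for a scraper; yields one page of rows at a time."""
    yield [("Example Widget", 19.99), ("Another Widget", 24.50)]
    yield [("Third Widget", 5.00)]


# For real persistence, use a file path such as "products.db" instead.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE IF NOT EXISTS products (title TEXT, price REAL)")

for page_rows in scrape_pages():
    save_batch(conn, page_rows)  # each page is stored, then can be discarded

total = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(total)
```

Because only one page of rows exists in memory at a time, memory use stays roughly constant no matter how many pages are scraped.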

One last thing we can scrape from a product page is its reviews. Another note is that if you send as many headers as possible, you will not need JavaScript rendering. If you need rendering, you will need tools like Playwright or Selenium.